System Testing: 7 Powerful Steps to Master Ultimate Quality Assurance

Ever wonder how software handles real-world chaos without crashing? Much of the answer lies in system testing, the phase that checks your application works correctly from end to end. It’s not just about finding bugs; it’s about building confidence in reliability, performance, and user satisfaction.

What Is System Testing and Why It Matters

System testing is a high-level software testing phase conducted after integration testing and before acceptance testing. It evaluates the complete, integrated system to verify that it meets specified requirements. Unlike unit or integration tests, which focus on components, system testing looks at the software as a whole—just as users will experience it.

Definition and Core Purpose

System testing involves validating the entire software system against its functional and non-functional requirements. Its primary goal is to ensure that all parts of the application work together seamlessly in a real-world environment.

- It checks both functional behavior (e.g., login, data processing) and non-functional aspects (e.g., speed, security).

- Conducted in a production-like environment to simulate actual usage.

- Typically performed by testers who are independent of the development team, which improves objectivity.

“System testing is the final checkpoint before software meets the real world. It’s where theory meets reality.” — ISTQB Foundation Level Syllabus

How It Fits in the Software Testing Lifecycle

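System testing sits toward the top of the testing pyramid: there are fewer system tests than unit or integration tests, but each one exercises much more of the application. After unit tests validate individual code modules and integration tests confirm that modules work together, system testing evaluates the full system.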

- Precedes user acceptance testing (UAT), where stakeholders validate usability.

- Follows integration testing; in agile environments, it often runs in parallel with performance and security testing rather than as a strictly sequential phase.

- Acts as a bridge between technical validation and business readiness.

For example, in a banking application, unit tests might verify interest calculation logic, integration tests check database connectivity, and system testing ensures that a customer can successfully transfer money from account A to B, including notifications, logging, and balance updates.
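The banking example can be sketched in Python to show what a system-level check asserts that lower-level tests do not: one user action, several observable outcomes. `BankSystem` is a hypothetical in-memory stand-in; a real system test would drive the deployed application through its UI or API and inspect the actual database, notification service, and logs.

```python
from dataclasses import dataclass, field

@dataclass
class BankSystem:
    """Hypothetical stand-in for the full deployed banking application."""
    balances: dict[str, int]  # account id -> balance in cents
    notifications: list[str] = field(default_factory=list)
    audit_log: list[str] = field(default_factory=list)

    def transfer(self, src: str, dst: str, amount: int) -> bool:
        # Reject non-positive amounts, insufficient funds, and unknown accounts
        if amount <= 0 or self.balances.get(src, 0) < amount or dst not in self.balances:
            self.audit_log.append(f"REJECTED {src}->{dst} {amount}")
            return False
        self.balances[src] -= amount
        self.balances[dst] += amount
        self.audit_log.append(f"TRANSFER {src}->{dst} {amount}")
        self.notifications.append(f"{src}: sent {amount}")
        self.notifications.append(f"{dst}: received {amount}")
        return True

def test_transfer_end_to_end() -> None:
    bank = BankSystem(balances={"A": 10_000, "B": 500})
    assert bank.transfer("A", "B", 2_500)
    # Balance updates on both accounts
    assert bank.balances == {"A": 7_500, "B": 3_000}
    # Side effects that span other components: logging and notifications
    assert bank.audit_log == ["TRANSFER A->B 2500"]
    assert len(bank.notifications) == 2

test_transfer_end_to_end()
```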

Types of System Testing: A Comprehensive Breakdown

System testing isn’t a single activity—it’s a collection of test types, each targeting different aspects of system behavior. Understanding these types helps teams build a robust test strategy.

Functional System Testing

This type verifies that the system performs its intended functions correctly. It ensures that user actions produce the expected results.

- Validates business workflows like order processing, user registration, or payment gateways.

- Uses test cases derived from requirement specifications.

- Includes positive testing (valid inputs) and negative testing (invalid inputs).

For instance, in an e-commerce platform, functional system testing would confirm that adding items to the cart, applying discounts, and completing checkout all work as expected.
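A minimal Python sketch of positive and negative functional tests for the checkout flow follows. `Cart`, the `SAVE10` code, and its 10% rate are hypothetical; in practice the same assertions would run against the deployed shop through its API or UI.

```python
from decimal import Decimal

class Cart:
    """Hypothetical cart standing in for the e-commerce system under test."""
    VALID_CODES = {"SAVE10": Decimal("0.10")}  # assumed discount codes and rates

    def __init__(self) -> None:
        self.items: dict[str, tuple[Decimal, int]] = {}
        self.discount = Decimal("0")

    def add(self, sku: str, price: Decimal, qty: int) -> None:
        if qty <= 0:
            raise ValueError("quantity must be positive")
        self.items[sku] = (price, qty)

    def apply_code(self, code: str) -> None:
        if code not in self.VALID_CODES:
            raise ValueError(f"unknown discount code: {code}")
        self.discount = self.VALID_CODES[code]

    def total(self) -> Decimal:
        subtotal = sum((price * qty for price, qty in self.items.values()), Decimal("0"))
        return (subtotal * (1 - self.discount)).quantize(Decimal("0.01"))

def run_checkout_tests() -> None:
    # Positive test: valid items plus a valid code produce the expected total
    cart = Cart()
    cart.add("book", Decimal("20.00"), 2)
    cart.apply_code("SAVE10")
    assert cart.total() == Decimal("36.00")

    # Negative tests: invalid inputs must be rejected, not silently accepted
    for action in (lambda: cart.add("pen", Decimal("1.00"), 0),
                   lambda: cart.apply_code("FREE100")):
        try:
            action()
        except ValueError:
            pass
        else:
            raise AssertionError("invalid input was accepted")

run_checkout_tests()
```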

Non-Functional System Testing

While functional testing asks “Does it work?”, non-functional testing asks “How well does it work?” This category includes performance, usability, security, and reliability testing.

- Performance Testing: Measures response time, throughput, and resource usage under load. Tools like Apache JMeter are commonly used.

- Security Testing: Identifies vulnerabilities such as SQL injection or cross-site scripting (XSS). The OWASP Web Security Testing Guide provides guidance on security testing practices.

- Usability Testing: Evaluates user experience, navigation ease, and interface clarity.

For example, a healthcare app must not only process patient records correctly (functional) but also load them quickly (performance) and protect sensitive data (security).
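To make "response time and throughput under load" concrete, here is a small Python sketch that fires concurrent calls and reports throughput plus median and 95th-percentile latency. `handle_request` is a hypothetical stand-in that sleeps instead of doing real work; a dedicated tool like JMeter does the same at much larger scale, against the real deployment.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor

def handle_request(_: int) -> float:
    """Hypothetical request handler; returns its own latency in seconds."""
    start = time.perf_counter()
    time.sleep(0.01)  # stand-in for real work (I/O, database query, ...)
    return time.perf_counter() - start

def load_test(requests: int, concurrency: int) -> dict[str, float]:
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(handle_request, range(requests)))
    elapsed = time.perf_counter() - start
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile
    p95 = statistics.quantiles(latencies, n=100)[94]
    return {
        "throughput_rps": requests / elapsed,
        "p50_s": statistics.median(latencies),
        "p95_s": p95,
    }

if __name__ == "__main__":
    print(load_test(requests=200, concurrency=20))
```

A system test would then compare these numbers with the non-functional requirement, for example by failing when `p95_s` exceeds an agreed threshold.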

Recovery and Failover Testing

This type assesses how well the system recovers from crashes, hardware failures, or network outages.

- Simulates server crashes or database failures to verify backup restoration.

- Ensures minimal data loss and downtime during recovery.

- Critical for systems requiring high availability, like cloud services or financial platforms.

A telecom billing system, for example, must resume operations without losing transaction data after a power outage.
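A recovery test usually follows a crash, restart, verify pattern. The Python sketch below assumes a hypothetical `JournaledLedger` that appends each transaction to a journal file and forces it to disk before acknowledging it. The test simulates a power cut by writing a torn record and discarding in-memory state, then checks that every acknowledged transaction survives the restart. Write-ahead journaling is one common design, not necessarily how any particular billing system works.

```python
import json
import os
import tempfile

class JournaledLedger:
    """Hypothetical ledger that journals every transaction before acknowledging it."""

    def __init__(self, path: str) -> None:
        self.path = path
        self.transactions: list[dict] = []
        self._recover()

    def _recover(self) -> None:
        """Rebuild in-memory state by replaying the journal after a (re)start."""
        if not os.path.exists(self.path):
            return
        with open(self.path, encoding="utf-8") as f:
            for line in f:
                try:
                    self.transactions.append(json.loads(line))
                except json.JSONDecodeError:
                    break  # torn final record from a crash mid-write; never acknowledged

    def record(self, tx: dict) -> None:
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps(tx) + "\n")
            f.flush()
            os.fsync(f.fileno())  # force to disk before acknowledging
        self.transactions.append(tx)

def test_recovery_after_crash() -> None:
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "journal.log")
        ledger = JournaledLedger(path)
        for i in range(3):
            ledger.record({"id": i, "amount_cents": 100 * (i + 1)})
        # Simulate a power cut during a fourth write: only part of it reaches the file
        with open(path, "a", encoding="utf-8") as f:
            f.write('{"id": 3, "amou')
        del ledger  # all in-memory state is lost
        restarted = JournaledLedger(path)
        # Every acknowledged transaction survived; the unacknowledged one did not
        assert [tx["id"] for tx in restarted.transactions] == [0, 1, 2]

test_recovery_after_crash()
```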

Key Objectives of System Testing

The goals of system testing go beyond simply finding bugs. It aims to deliver confidence in the software’s readiness for deployment.

Validate End-to-End Business Processes

System testing ensures that complex workflows function correctly across multiple modules. For example, in an ERP system, placing a purchase order should trigger inventory updates, budget checks, and approval workflows.

- Tests integration between front-end, back-end, databases, and third-party APIs.

- Verifies data consistency across subsystems.

- Confirms compliance with business rules and logic.

This level of validation is essential for enterprise applications where failure in one module can cascade across departments.

Ensure Compliance with Requirements

One of the primary objectives is to confirm that the software meets all documented functional and non-functional requirements.

- Test cases are directly mapped to requirement IDs for traceability.

- Helps in audit readiness, especially in regulated industries like finance or healthcare.

- Reduces the risk of post-deployment disputes between clients and developers.

Using tools like Jira or TestRail, teams can track requirement coverage and ensure nothing is missed.
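Traceability can be checked mechanically once each test case lists the requirement IDs it verifies. This Python sketch computes requirement coverage and lists uncovered requirements; the `REQ-` and `TC-` identifiers are hypothetical, and test management tools produce similar reports from their own data.

```python
def coverage_report(requirements: set[str],
                    test_map: dict[str, list[str]]) -> tuple[float, set[str]]:
    """Return the fraction of requirements covered and the uncovered requirement IDs.

    test_map maps a test-case ID to the requirement IDs it verifies.
    References to IDs outside `requirements` are ignored here.
    """
    covered = {req for reqs in test_map.values() for req in reqs} & requirements
    uncovered = requirements - covered
    return len(covered) / len(requirements), uncovered

requirements = {"REQ-1", "REQ-2", "REQ-3", "REQ-4"}
test_map = {
    "TC-101": ["REQ-1"],
    "TC-102": ["REQ-1", "REQ-2"],
    "TC-103": ["REQ-4"],
}
ratio, missing = coverage_report(requirements, test_map)
print(f"{ratio:.0%} covered, missing: {sorted(missing)}")  # 75% covered, missing: ['REQ-3']
```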

Identify System-Level Defects

Some bugs only appear when the entire system runs together. Examples include memory leaks, race conditions, and configuration errors.

- Uncovers issues that unit and integration tests miss.

- Reveals problems in data flow, error handling, and exception management.

- Helps improve system stability and reduce production incidents.

For example, a mobile app might work perfectly in isolation but crash when running alongside other apps due to memory constraints, a failure that component-level tests are unlikely to catch.

System Testing vs. Other Testing Types

Understanding the differences between system testing and other testing phases clarifies its unique role in quality assurance.

Unit Testing vs. System Testing

Unit testing focuses on individual components or functions, typically written by developers. In contrast, system testing evaluates the entire application.

- Unit tests are fast, automated, and run frequently during development.

- System tests are broader, slower, and often involve manual or semi-automated execution.

- Unit tests check that individual pieces of code behave correctly; system tests check that the assembled system meets its requirements.

Think of unit testing as checking each instrument in an orchestra, while system testing listens to the full symphony.

Integration Testing vs. System Testing

Integration testing verifies interactions between modules or services. System testing takes this further by testing the fully integrated system in a production-like environment.

- Integration testing might check if a login module communicates with the database.

- System testing checks if the user can log in, navigate the dashboard, perform actions, and log out successfully.

- Integration tests are narrower in scope; system tests are holistic.

For microservices architectures, integration testing ensures API contracts are honored, while system testing validates the end-user journey across services.

Acceptance Testing vs. System Testing

Acceptance testing (UAT) is performed by end-users or clients to confirm the software meets business needs. System testing is done by QA teams to verify technical and functional correctness.

- System testing is more technical and comprehensive.

- UAT is more subjective and focused on usability and business value.

- System testing catches defects that would otherwise block or derail UAT.

In practice, system testing paves the way for successful UAT by ensuring the system is stable and functional before user involvement.

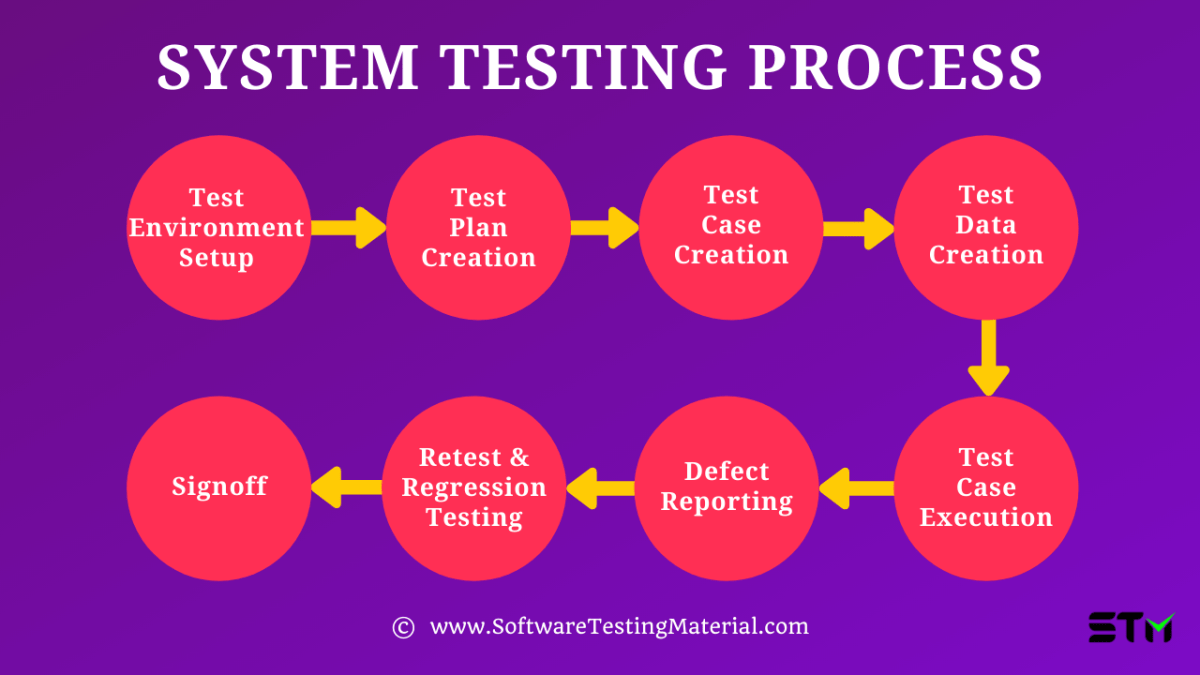

Step-by-Step Process of Conducting System Testing

Executing effective system testing requires a structured approach. The following seven-step process covers the typical lifecycle.

1. Requirement Analysis

Before writing a single test case, testers must thoroughly understand the software requirements.

- Review functional specifications, user stories, and design documents.

- Clarify ambiguities with business analysts or product owners.

- Identify testable conditions and edge cases.

This foundational step ensures that testing aligns with business goals and reduces rework later.

2. Test Planning

A detailed test plan outlines the scope, resources, schedule, and deliverables for system testing.

- Define test objectives, entry/exit criteria, and risk factors.

- Assign roles and responsibilities to QA team members.

- Select testing tools (e.g., Selenium for automation, Postman for API testing).

The test plan serves as a roadmap and is often shared with stakeholders for approval.
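Exit criteria are most useful when they are stated precisely enough to check automatically. The thresholds in this Python sketch (95% pass rate, no open critical defects, every high-priority test executed) are illustrative assumptions, not a standard; the real values belong in the approved test plan.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ExitCriteria:
    # Illustrative thresholds; real values come from the approved test plan
    min_pass_rate: float = 0.95
    max_open_critical: int = 0
    min_high_priority_executed: float = 1.0

def unmet_exit_criteria(passed: int, executed: int, open_critical: int,
                        high_pri_executed: int, high_pri_total: int,
                        criteria: ExitCriteria = ExitCriteria()) -> list[str]:
    """Return the unmet criteria; an empty list means testing can be closed."""
    problems = []
    if executed == 0 or passed / executed < criteria.min_pass_rate:
        problems.append("pass rate below threshold")
    if open_critical > criteria.max_open_critical:
        problems.append(f"{open_critical} critical defect(s) still open")
    if high_pri_total and high_pri_executed / high_pri_total < criteria.min_high_priority_executed:
        problems.append("not all high-priority tests executed")
    return problems

print(unmet_exit_criteria(passed=196, executed=200, open_critical=1,
                          high_pri_executed=40, high_pri_total=40))
# ['1 critical defect(s) still open']
```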

3. Test Case Design

Test cases are created based on requirements, covering both normal and exceptional scenarios.

- Use techniques like equivalence partitioning, boundary value analysis, and decision tables.

- Include preconditions, input data, expected results, and postconditions.

- Prioritize test cases based on risk and business impact.

Well-designed test cases improve test coverage and make execution more efficient.
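Boundary value analysis and equivalence partitioning translate directly into test inputs. The Python sketch below assumes a hypothetical rule that an age field accepts integers from 18 to 65 inclusive; it derives three-value boundary inputs plus one representative per equivalence class and checks each against the expected result taken from that specification.

```python
def boundary_values(lo: int, hi: int) -> list[int]:
    """Three-value boundary analysis for an inclusive integer range [lo, hi]:
    each boundary plus its neighbors on either side."""
    return sorted({lo - 1, lo, lo + 1, hi - 1, hi, hi + 1})

def equivalence_representatives(lo: int, hi: int) -> dict[str, int]:
    """One representative value per partition: below, inside, and above the range."""
    return {"invalid_low": lo - 10, "valid": (lo + hi) // 2, "invalid_high": hi + 10}

def is_valid_age(age: int) -> bool:
    # Hypothetical implementation under test: accepts ages 18 to 65 inclusive
    return 18 <= age <= 65

LO, HI = 18, 65  # assumed specification for the age field
inputs = boundary_values(LO, HI) + list(equivalence_representatives(LO, HI).values())
for value in inputs:
    expected = LO <= value <= HI  # expected result derived from the specification
    assert is_valid_age(value) == expected, f"age {value}: expected {expected}"
print(inputs)  # [17, 18, 19, 64, 65, 66, 8, 41, 75]
```

If the implementation had an off-by-one error such as `18 < age`, the input 18 would fail its assertion, which is exactly the class of defect boundary analysis targets.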

4. Test Environment Setup

The test environment should mirror production as closely as possible.

- Install the operating system, databases, middleware, and network configurations.

- Populate test data that reflects real-world usage patterns.

- Ensure access controls and security settings are in place.

A poorly configured environment can lead to false positives or missed defects.

5. Test Execution

This is where test cases are run, either manually or through automation.

- Log defects with detailed steps to reproduce, screenshots, and logs.

- Retest fixed bugs to confirm resolution.

- Track progress using dashboards or test management tools.

Execution is iterative—new test cycles begin after major fixes or updates.

6. Defect Reporting and Tracking

Every identified issue must be documented and tracked to closure.

- Use defect tracking tools like Jira, Bugzilla, or Azure DevOps.

- Categorize bugs by severity (critical, major, minor) and priority.

- Maintain clear communication between testers and developers.

Effective defect management ensures transparency and accountability in the QA process.
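Severity (technical impact) and priority (business urgency) are separate fields, and a triage queue usually considers both. In this Python sketch, the enum values, the defect keys, and the "priority first, then severity" ordering are assumptions for illustration; Jira and similar tools let teams define their own schemes.

```python
from dataclasses import dataclass
from enum import IntEnum

class Severity(IntEnum):  # technical impact; lower value = worse
    CRITICAL = 1
    MAJOR = 2
    MINOR = 3

class Priority(IntEnum):  # business urgency of the fix; lower value = sooner
    P1 = 1
    P2 = 2
    P3 = 3

@dataclass
class Defect:
    key: str
    summary: str
    severity: Severity
    priority: Priority
    status: str = "open"

def triage_queue(defects: list[Defect]) -> list[Defect]:
    """Open defects ordered by priority, then severity."""
    open_defects = [d for d in defects if d.status == "open"]
    return sorted(open_defects, key=lambda d: (d.priority, d.severity))

defects = [
    # Minor severity but high priority: a visible typo on every invoice
    Defect("BUG-7", "Typo on invoice footer", Severity.MINOR, Priority.P1),
    Defect("BUG-3", "Crash when exporting 10k rows", Severity.CRITICAL, Priority.P2),
    Defect("BUG-5", "Slow dashboard load", Severity.MAJOR, Priority.P2),
    Defect("BUG-1", "Login fails for SSO users", Severity.CRITICAL, Priority.P1, status="closed"),
]
print([d.key for d in triage_queue(defects)])  # ['BUG-7', 'BUG-3', 'BUG-5']
```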

7. Test Closure and Reporting

Once testing is complete, a final report summarizes the results.

- Include metrics like test coverage, pass/fail rates, and defect density.

- Highlight risks and unresolved issues.

- Provide recommendations for release or further testing.

The test closure report is a key deliverable for stakeholders deciding whether to deploy the software.
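The headline closure metrics are simple ratios. This Python sketch assumes "coverage" means execution coverage (executed divided by planned test cases) and measures defect density per thousand lines of code (KLOC); some teams use requirement coverage or function points instead. The input numbers are made up.

```python
def closure_metrics(planned: int, executed: int, passed: int,
                    defects_found: int, size_kloc: float) -> dict[str, float]:
    """Summary metrics for a test closure report.

    Assumes every executed test either passed or failed (no "blocked" status).
    """
    return {
        "execution_coverage": executed / planned,
        "pass_rate": passed / executed,
        "fail_rate": (executed - passed) / executed,
        "defect_density_per_kloc": defects_found / size_kloc,
    }

metrics = closure_metrics(planned=400, executed=380, passed=361,
                          defects_found=42, size_kloc=120.0)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```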

Best Practices for Effective System Testing

Following industry best practices enhances the effectiveness and efficiency of system testing.

Start Early and Test Continuously

Although system testing occurs late in the cycle, preparation should begin early.

- Involve QA in requirement reviews to catch ambiguities upfront.

- In agile projects, conduct system testing in each sprint for critical features.

- Use shift-left testing principles to integrate QA earlier in development.

Early involvement reduces last-minute surprises and accelerates delivery.

Use Realistic Test Data

Testing with unrealistic or incomplete data can lead to inaccurate results.

- Use anonymized production data or generate realistic datasets.

- Include edge cases like invalid inputs, large volumes, or special characters.

- Avoid hardcoded or placeholder data that doesn’t reflect actual usage.

For example, testing a search function with only “test” as input won’t reveal performance issues under real query loads.
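One way to derive test data from production records is keyed-hash pseudonymization: each personal field is replaced by a deterministic token, so the same customer maps to the same token across tables and joins still work, while amounts, dates, and other non-identifying fields stay realistic. Note that this is pseudonymization, a weaker property than full anonymization. The field names and the inline key in this Python sketch are hypothetical; a real key would come from a secrets store.

```python
import hashlib
import hmac

SECRET_KEY = b"example-key-load-from-a-vault"  # hypothetical; never hardcode in practice
PII_FIELDS = {"name", "email", "phone"}        # hypothetical schema

def pseudonymize(value: str) -> str:
    """Deterministic keyed token: equal inputs give equal tokens, preserving joins."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"anon_{digest[:16]}"

def mask_record(record: dict[str, str]) -> dict[str, str]:
    # Replace PII fields; keep everything else realistic for the system under test
    return {k: pseudonymize(v) if k in PII_FIELDS else v for k, v in record.items()}

row = {"name": "Ana Souza", "email": "ana@example.com", "city": "Lisbon", "order_total": "129.90"}
print(mask_record(row))
```

One trade-off: the tokens no longer look like e-mail addresses or phone numbers, so format validation in the system under test may reject them; format-preserving masking is an alternative when that matters.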

Leverage Automation Strategically

While not all system tests can be automated, repetitive and high-impact tests benefit greatly from automation.

- Automate regression test suites to save time in later cycles.

- Use frameworks like Selenium, Cypress, or Playwright for UI testing.

- Integrate automated system tests into CI/CD pipelines for continuous validation.

However, manual testing remains essential for exploratory, usability, and ad-hoc testing.

Common Challenges in System Testing and How to Overcome Them

Despite its importance, system testing faces several obstacles that can impact quality and timelines.

Unstable Test Environments

Frequent environment crashes or configuration drifts disrupt testing cycles.

- Solution: Use infrastructure-as-code (IaC) tools like Terraform or Ansible to automate environment setup.

- Implement environment monitoring and health checks.

- Reserve dedicated environments for system testing to avoid conflicts.

Stable environments lead to consistent and reliable test results.
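Health checks are most useful as a gate before a test run, so an unreachable environment aborts the run instead of producing a wave of false defect reports. This Python sketch polls an HTTP endpoint until it returns 200 or a timeout expires; the existence of a dedicated health URL is an assumption about the system under test.

```python
import time
import urllib.request

def wait_until_healthy(url: str, timeout_s: float = 60.0, interval_s: float = 2.0) -> bool:
    """Poll a health endpoint until it answers HTTP 200 or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=5) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # URLError, HTTPError and socket timeouts all subclass OSError:
            # the environment is not ready yet, so wait and retry
            pass
        time.sleep(interval_s)
    return False
```

A test runner could call `wait_until_healthy` with the environment's health URL before the first test case and stop the run if it returns `False`.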

Incomplete or Changing Requirements

Unclear or evolving requirements make it difficult to design accurate test cases.

- Solution: Adopt agile practices with frequent collaboration between QA, developers, and product owners.

- Use living documentation tools like Cucumber to keep tests aligned with requirements.

- Apply risk-based testing to focus on stable, high-priority features.

Flexibility and communication are key to managing change effectively.

Time and Resource Constraints

Tight deadlines often lead to rushed testing or skipped test cases.

- Solution: Prioritize test cases based on business impact and defect likelihood.

- Use test automation to increase coverage without a proportional increase in manual effort.

- Negotiate realistic timelines by highlighting risks of inadequate testing.

Quality should never be sacrificed for speed—poor testing leads to costly post-release fixes.
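A common way to prioritize by business impact and defect likelihood is a risk score of impact times likelihood, then running the riskiest tests first until the time budget is spent. In this Python sketch the 1 to 5 scales, test names, and durations are hypothetical, and the greedy selection is a simple heuristic rather than an optimal schedule.

```python
from dataclasses import dataclass

@dataclass
class PlannedTest:
    name: str
    impact: int      # business impact, 1 (low) to 5 (high)
    likelihood: int  # defect likelihood, 1 (low) to 5 (high)
    minutes: int     # estimated execution time

    @property
    def risk(self) -> int:
        return self.impact * self.likelihood

def plan_within_budget(tests: list[PlannedTest], budget_min: int) -> list[str]:
    """Greedily select the highest-risk tests that still fit into the time budget."""
    selected, used = [], 0
    for test in sorted(tests, key=lambda t: t.risk, reverse=True):
        if used + test.minutes <= budget_min:
            selected.append(test.name)
            used += test.minutes
    return selected

tests = [
    PlannedTest("checkout_payment", impact=5, likelihood=4, minutes=30),
    PlannedTest("profile_avatar_upload", impact=1, likelihood=3, minutes=15),
    PlannedTest("order_refund", impact=4, likelihood=3, minutes=25),
    PlannedTest("newsletter_signup", impact=2, likelihood=2, minutes=10),
]
print(plan_within_budget(tests, budget_min=60))  # ['checkout_payment', 'order_refund']
```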

The Role of Automation in System Testing

Automation is transforming how system testing is conducted, especially in fast-paced development environments.

When to Automate System Tests

Not all system tests are suitable for automation. The best candidates include:

- Regression test suites that run frequently.

- High-volume data-driven tests.

- Repetitive, stable workflows with predictable outcomes.

Tests involving human judgment, such as usability or exploratory testing, are better left manual.

Popular Tools for Automated System Testing

A variety of tools support automated system testing across different layers.

- Selenium: For web application UI testing.

- Postman: For API and service-level testing.

- JMeter: For performance and load testing.

- Cypress: Modern end-to-end testing framework with built-in debugging.

Choosing the right tool depends on the application architecture, team skills, and testing goals.

Integrating Automation into CI/CD Pipelines

Continuous Integration and Continuous Delivery (CI/CD) rely on automated system tests to validate every code change.

- Run automated system tests after deployment to a staging environment.

- Fail the pipeline if critical tests fail, preventing bad builds from progressing.

- Use tools like Jenkins, GitLab CI, or GitHub Actions to orchestrate test execution.

This integration ensures rapid feedback and maintains software quality at scale.
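Jenkins, GitLab CI, and GitHub Actions all treat a non-zero exit code from a step as a failure, so a small gate script is enough to stop a bad build. This Python sketch reads a JUnit-style XML results file, a format many test frameworks can emit, and fails if any test whose name starts with `critical_` failed or errored; that naming convention is a hypothetical way of marking critical tests.

```python
import sys
import xml.etree.ElementTree as ET

def failed_critical_tests(junit_xml: bytes, prefix: str = "critical_") -> list[str]:
    """Names of failed or errored test cases marked critical by the (assumed) name prefix."""
    root = ET.fromstring(junit_xml)
    failed = []
    for case in root.iter("testcase"):  # works for <testsuite> and <testsuites> roots
        name = case.get("name", "")
        broken = case.find("failure") is not None or case.find("error") is not None
        if name.startswith(prefix) and broken:
            failed.append(name)
    return failed

def gate(results_path: str) -> int:
    """Exit code for the pipeline step: 0 lets the build proceed, 1 fails the stage."""
    with open(results_path, "rb") as f:
        failures = failed_critical_tests(f.read())
    for name in failures:
        print(f"CRITICAL TEST FAILED: {name}", file=sys.stderr)
    return 1 if failures else 0
```

A pipeline step would end the script with `sys.exit(gate("results.xml"))`, so the stage fails exactly when a critical test fails and non-critical failures are left to the report.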

What is the main goal of system testing?

The main goal of system testing is to evaluate the complete, integrated software system to ensure it meets specified functional and non-functional requirements. It verifies that the application works as expected in a real-world environment before release.

How is system testing different from integration testing?

Integration testing focuses on verifying interactions between modules or services, while system testing evaluates the entire system as a whole. System testing includes both functional and non-functional aspects and is performed in a production-like environment.

Can system testing be automated?

Yes, many aspects of system testing can be automated, especially regression, API, and performance tests. However, manual testing is still essential for exploratory, usability, and ad-hoc testing scenarios.

What are the common types of system testing?

Common types include functional testing, performance testing, security testing, recovery testing, usability testing, and compatibility testing. Each type targets a specific aspect of system behavior.

When should system testing be performed?

System testing should be performed after integration testing and before user acceptance testing (UAT). It begins once all modules are integrated and the system is stable enough for end-to-end validation.

System testing is the cornerstone of software quality assurance. It goes beyond isolated component checks to validate the entire application in a realistic environment. From functional correctness to performance, security, and recovery, system testing ensures that software is not just built right, but also built to last. By following a structured process, leveraging automation wisely, and addressing common challenges, QA teams can deliver reliable, high-quality software that meets user expectations. Whether you’re testing a mobile app, enterprise system, or cloud service, mastering system testing is essential for success in today’s digital world.
