System Haptics: 7 Revolutionary Insights That Will Transform Your Tech Experience

Ever wondered how your phone buzzes just right when you type or how game controllers seem to ‘push back’ during intense action? Welcome to the world of system haptics—where touch meets technology in the most immersive way possible.

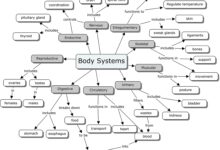

What Are System Haptics?

System haptics refers to the integrated feedback mechanisms in electronic devices that simulate the sense of touch through vibrations, forces, or motions. Unlike basic vibration motors from the early 2000s, modern system haptics are engineered for precision, responsiveness, and realism. They’re not just about buzzing—they’re about communicating with your fingertips.

The Science Behind Touch Feedback

Haptics, derived from the Greek word “haptikos” meaning “able to touch,” is a multidisciplinary field combining engineering, psychology, and computer science. System haptics specifically refers to the software-hardware ecosystem that delivers tactile feedback in consumer electronics. This includes everything from the actuator (the physical motor) to the control algorithms that dictate how and when it vibrates.

- Actuators convert electrical signals into mechanical motion.

- Control systems use timing, frequency, and amplitude to create nuanced sensations.

- Sensors often feed real-time data to adjust haptic responses dynamically.
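
Timing, frequency, and amplitude are enough to describe a basic drive signal. The sketch below assumes a simple sine burst sampled at a hypothetical 8 kHz, with short linear fades so the actuator starts and stops cleanly. The 170 Hz frequency and 5 ms ramp are illustrative values, not taken from any particular device or driver chip.

```python
import math


def haptic_waveform(frequency_hz, amplitude, duration_s,
                    sample_rate_hz=8000, ramp_s=0.005):
    """Return drive samples for a sine burst with linear fade-in and fade-out.

    All defaults are illustrative assumptions, not values from a real driver IC.
    """
    n = int(round(duration_s * sample_rate_hz))   # timing: total number of samples
    ramp = max(1, int(round(ramp_s * sample_rate_hz)))
    samples = []
    for i in range(n):
        # Envelope rises over the first `ramp` samples and falls over the last `ramp`.
        envelope = min(1.0, (i + 1) / ramp, (n - i) / ramp)
        phase = 2 * math.pi * frequency_hz * i / sample_rate_hz  # frequency
        samples.append(amplitude * envelope * math.sin(phase))   # amplitude
    return samples


# A short "tap": 170 Hz for 20 ms at 80% of full drive (hypothetical values).
tap = haptic_waveform(frequency_hz=170, amplitude=0.8, duration_s=0.020)
print(len(tap))  # 160
```

Fading in and out avoids an abrupt step at either end of the burst, which would otherwise add an unintended click.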

“Haptics is the silent language of devices—when done right, you don’t notice it, but you’d miss it instantly if it were gone.” — Dr. Lynette Jones, MIT Senior Research Scientist

Evolution from Simple Vibration to Advanced Feedback

In the early days, mobile phones used eccentric rotating mass (ERM) motors: small motors spinning an off-center weight, which produced a blunt, largely on-or-off vibration that was slow to start and stop. These were effective for alerts but lacked subtlety. The real leap came with linear resonant actuators (LRAs), which oscillate a mass along a single axis with higher efficiency and faster response times.

Today’s system haptics go beyond the actuator alone. Devices like the iPhone use Apple’s Taptic Engine, a custom linear actuator whose output is shaped by software to deliver context-aware feedback. For example, when you spin a picker wheel such as the date picker, the engine produces a series of subtle ticks that mimic the feeling of moving over physical ridges, enhancing the illusion of real interaction.
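
The tick-per-row behavior of a picker can be modeled as one haptic event per row boundary crossed during a scroll. This is a hypothetical sketch, not Apple’s implementation; the function name and the 44-point row height are assumptions.

```python
import math


def detent_ticks(start_offset, end_offset, row_height):
    """Return the row boundaries crossed while scrolling between two offsets.

    A picker-style UI would play one haptic tick per boundary returned.
    """
    if row_height <= 0:
        raise ValueError("row_height must be positive")
    lo, hi = sorted((start_offset, end_offset))
    first = math.floor(lo / row_height) + 1  # first boundary strictly above lo
    last = math.floor(hi / row_height)       # last boundary at or below hi
    return [k * row_height for k in range(first, last + 1)]


# Hypothetical 44-point rows: scrolling 130 points crosses two boundaries.
print(detent_ticks(0, 130, 44))  # [44, 88]
print(detent_ticks(130, 0, 44))  # [44, 88] (direction does not matter)
```

Tying ticks to boundaries rather than to elapsed time keeps the feedback locked to what is visibly happening on screen, whether the user scrolls slowly or flicks.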

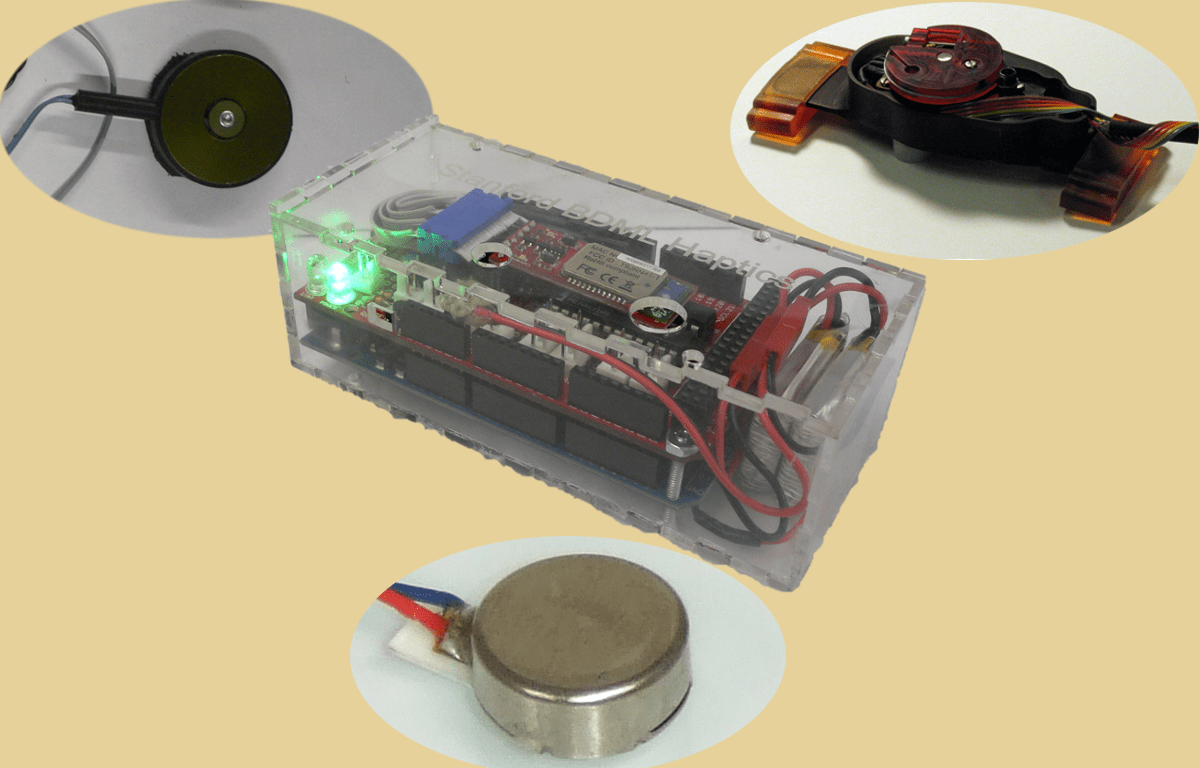

How System Haptics Work: The Core Components

To truly appreciate system haptics, you need to understand the three pillars that make them function: hardware, software, and sensory integration. These components work in harmony to create a seamless tactile experience.

Hardware: The Physical Engine of Touch

The hardware in system haptics includes actuators, drivers, and sensors. The most common actuators today are:

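- Linear Resonant Actuators (LRAs): Use a voice coil and magnet to drive a spring-mounted mass back and forth along a single axis, and work best near the resonant frequency of that spring-mass system. Known for faster start/stop times and better energy efficiency than ERMs.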

- Piezoelectric Actuators: Use materials that expand or contract when voltage is applied. These are thinner and more precise than LRAs, ideal for slim devices like smartwatches.

- Electroactive Polymers (EAPs): Still in experimental stages, these materials mimic muscle movement and could enable soft, lifelike haptic feedback in the future.
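
Because an LRA is a spring-mass system, it moves most efficiently near its resonant frequency, which is why haptic drivers aim to drive it there. The sketch below uses the textbook damped-oscillator model. The 1 g mass, 1200 N/m stiffness, and 0.05 damping ratio are hypothetical numbers chosen to land in a plausible range, not specifications of a real actuator.

```python
import math


def resonant_frequency_hz(stiffness_n_per_m, mass_kg):
    """Natural frequency of an ideal spring-mass system: f0 = sqrt(k / m) / (2 * pi)."""
    return math.sqrt(stiffness_n_per_m / mass_kg) / (2 * math.pi)


def relative_amplitude(drive_hz, f0_hz, damping_ratio):
    """Steady-state displacement of a damped oscillator driven at drive_hz,
    normalised so that a static (0 Hz) force of the same size gives 1.0."""
    r = drive_hz / f0_hz
    return 1.0 / math.sqrt((1 - r * r) ** 2 + (2 * damping_ratio * r) ** 2)


# Hypothetical actuator: 1 g moving mass on a 1200 N/m spring, lightly damped.
f0 = resonant_frequency_hz(stiffness_n_per_m=1200.0, mass_kg=0.001)
print(round(f0))                              # 174
print(relative_amplitude(f0, f0, 0.05))       # about 10 at resonance
print(relative_amplitude(2 * f0, f0, 0.05))   # about 0.33 at twice the resonance
```

With light damping, the same drive produces roughly thirty times more motion at resonance than at twice that frequency. This is the efficiency advantage the list attributes to LRAs, and also why they feel narrower in frequency range than piezoelectric actuators.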

Companies like Borrelly and TDK are leading the charge in developing next-gen haptic actuators that are smaller, faster, and more energy-efficient.

Software: The Brain Behind the Buzz

Hardware alone can’t create meaningful feedback. Software defines the ‘language’ of haptics. Operating systems like iOS and Android include haptic APIs (Application Programming Interfaces) that allow developers to trigger specific tactile patterns.

For example, Apple’s UIFeedbackGenerator family lets app developers trigger predefined haptic types through three subclasses: UIImpactFeedbackGenerator, UINotificationFeedbackGenerator, and UISelectionFeedbackGenerator. These aren’t random vibrations; they’re carefully tuned to match user actions.

- Selection: A light tick as a selected value changes, such as when scrolling through a picker.

- Impact: A sharp pulse when a game character hits a wall.

- Notification (success, warning, error): Distinct patterns for confirming, cautioning about, or rejecting an action.

This level of control transforms system haptics from a gimmick into a functional interface layer.
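
On iOS, each category is triggered by a method call: selectionChanged(), impactOccurred(), or notificationOccurred(_:) with .success, .warning, or .error. To keep the examples in one language, the sketch below is a Python model of a design practice rather than iOS code. It routes every app event through a single table so the same action always produces the same feel. The event names are hypothetical.

```python
from enum import Enum


class Feedback(Enum):
    """Feedback categories modelled on UIKit's three generator types."""
    SELECTION = "selection"
    IMPACT_LIGHT = "impact.light"
    IMPACT_HEAVY = "impact.heavy"
    SUCCESS = "notification.success"
    WARNING = "notification.warning"
    ERROR = "notification.error"


# Hypothetical app events and the feedback each one should trigger.
EVENT_FEEDBACK = {
    "picker_value_changed": Feedback.SELECTION,
    "card_snapped_into_place": Feedback.IMPACT_LIGHT,
    "character_hit_wall": Feedback.IMPACT_HEAVY,
    "low_storage_detected": Feedback.WARNING,
    "payment_confirmed": Feedback.SUCCESS,
    "password_rejected": Feedback.ERROR,
}


def feedback_for(event):
    """Return the feedback for an event, or None if the event should stay silent."""
    return EVENT_FEEDBACK.get(event)


print(feedback_for("password_rejected"))  # Feedback.ERROR
print(feedback_for("list_scrolled"))      # None
```

Leaving unlisted events silent is deliberate. It makes haptics opt-in per event, which keeps feedback meaningful rather than constant.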

Sensory Integration: Bridging the Digital and Physical

The human sense of touch is remarkably sensitive: at around 200 to 300 Hz, where vibrotactile sensitivity peaks, we can detect skin displacements as small as about 0.1 microns. System haptics exploit this sensitivity and pair tactile feedback with matching visual and auditory cues. This multisensory alignment creates a more convincing illusion of physical interaction.

For instance, when you press a key on the iPhone’s virtual keyboard with keyboard haptics enabled, the key animation, the click sound, and the haptic tap are closely synchronized. Your brain interprets this as a real button press, even though no key actually moves.
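
Whether the three cues feel like one event can be checked by comparing their onset times. This is a minimal sketch that assumes onsets are measured in milliseconds from the same clock. The tolerance is a hypothetical design parameter, since the window within which people perceive cues as simultaneous varies with the person and the stimulus.

```python
def in_sync(onsets_ms, tolerance_ms):
    """True if all cue onsets fall within tolerance_ms of each other."""
    times = list(onsets_ms.values())
    return max(times) - min(times) <= tolerance_ms


# Hypothetical onsets for one key press, relative to the touch event.
key_press = {"visual": 0.0, "audio": 4.0, "haptic": 9.0}
print(in_sync(key_press, tolerance_ms=20))  # True
print(in_sync(key_press, tolerance_ms=5))   # False
```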

Applications of System Haptics Across Industries

System haptics are no longer limited to smartphones. They’re revolutionizing industries by enhancing user experience, improving accessibility, and enabling new forms of interaction.

Smartphones and Wearables

Smartphones are the most widespread platform for system haptics. Apple’s Taptic Engine, which debuted in the Apple Watch and first reached the iPhone with the iPhone 6s, set a new standard. It provided the tactile response for 3D Touch (pressure-sensitive input) and later powered features such as haptic feedback for the virtual keyboard.

Wearables like the Apple Watch take it further. The watch uses haptics to deliver silent notifications—tapping your wrist to alert you of a message or guiding you with directional taps during navigation. This is especially useful in noisy environments or for users with hearing impairments.

- Haptic alerts reduce reliance on sound and visual cues.

- Customizable feedback patterns improve personalization.

- Health apps use haptics for breathing exercises and mindfulness reminders.

Gaming and Virtual Reality

In gaming, system haptics are transforming immersion. The PlayStation 5’s DualSense controller is a landmark example. It features adaptive triggers and advanced haptics that simulate everything from the tension of a bowstring to the rumble of a dirt bike.

According to Sony, the DualSense uses voice coil actuators—similar to those in speakers—to produce highly detailed vibrations. This allows developers to create dynamic feedback that changes based on in-game actions.
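
Voice coil actuators accept arbitrary waveforms, so a game can recompute vibration parameters every frame from its own state. The sketch below maps a hypothetical engine’s RPM to vibration frequency and its throttle to amplitude. The 40 to 300 Hz range and the idle and redline values are assumptions for illustration, not DualSense specifications or calls into Sony’s SDK.

```python
def engine_rumble(rpm, throttle, min_hz=40.0, max_hz=300.0,
                  idle_rpm=800.0, redline_rpm=9000.0):
    """Map a hypothetical engine state to (frequency_hz, amplitude 0..1)."""
    # Normalise RPM to 0..1 between idle and redline, clamping out-of-range values.
    t = (rpm - idle_rpm) / (redline_rpm - idle_rpm)
    t = min(1.0, max(0.0, t))
    frequency_hz = min_hz + t * (max_hz - min_hz)
    # Throttle sets how hard the actuator is driven; clamp to the valid range.
    amplitude = min(1.0, max(0.0, throttle))
    return frequency_hz, amplitude


print(engine_rumble(rpm=4900, throttle=0.3))   # (170.0, 0.3)
print(engine_rumble(rpm=12000, throttle=1.5))  # (300.0, 1.0)
```

Separating pitch (frequency) from strength (amplitude) is what distinguishes this kind of actuator from a classic rumble motor, where the two rise and fall together.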

“The DualSense doesn’t just vibrate—it tells a story through touch.” — IGN Review, 2020

In virtual reality (VR), system haptics are critical for presence. Devices like the HaptX Gloves use microfluidic technology to deliver force feedback and texture simulation. Users can ‘feel’ virtual objects, enhancing training simulations, medical education, and gaming.

Automotive and Driver Assistance

Modern cars are integrating system haptics into steering wheels, seats, and pedals. Haptic feedback in steering wheels can alert drivers to lane departures or collision risks through subtle pulses—more intuitive than beeping sounds.

Some vehicles use active accelerator pedals that push back against the driver’s foot when the car approaches a speed limit or when traffic is detected ahead. The added resistance nudges the driver to ease off, encouraging safer driving without taking control away from the driver.
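
The push-back can be modeled as a counterforce that grows with how far the car is past a threshold. The force is capped so the driver can always press through it. This is a minimal sketch: the gain, cap, and 5 km/h margin are hypothetical, and production systems use their own tuned control laws.

```python
def pedal_counterforce(speed_kmh, limit_kmh, gain_n_per_kmh=2.0,
                       max_force_n=20.0, margin_kmh=5.0):
    """Extra accelerator-pedal resistance in newtons (all constants hypothetical)."""
    onset_kmh = limit_kmh - margin_kmh       # begin pushing back just before the limit
    excess_kmh = max(0.0, speed_kmh - onset_kmh)
    # Cap the force so the driver can always override it by pressing harder.
    return min(max_force_n, gain_n_per_kmh * excess_kmh)


print(pedal_counterforce(40, 50))  # 0.0
print(pedal_counterforce(48, 50))  # 6.0
print(pedal_counterforce(70, 50))  # 20.0
```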

- Haptic seat vibrations can signal blind-spot warnings.

- Touchscreen dashboards use haptics to simulate button clicks.

- Future autonomous vehicles may use haptics to communicate handover timing.

System Haptics in Accessibility and Inclusive Design

One of the most impactful uses of system haptics is in accessibility. For users with visual or hearing impairments, tactile feedback can be a primary mode of interaction.

Assisting the Visually Impaired

Smartphones with system haptics can provide navigational cues through patterned vibrations. Apps like Microsoft’s Soundscape, which Microsoft retired in 2023, used 3D audio and haptic pulses to guide users through cities. Paired with a smartwatch, such an app might use a double tap on the left wrist to mean “turn left in 10 meters” and a long pulse to mark a point of interest.

Braille displays are also evolving with haptic technology. Refreshable braille devices use piezoelectric pins that rise and fall to form characters. When combined with system haptics, these devices can deliver dynamic, real-time tactile information.
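
Each braille cell is a 2-by-3 grid of dots numbered 1 to 6, so forming a character on a refreshable display means raising the right subset of pins. The sketch below covers only the letters a to t of standard uncontracted six-dot braille. The helper names are hypothetical, and real displays also handle contractions, numbers, and punctuation.

```python
# Dot numbers for a-j in six-dot braille.
BASE_DOTS = {
    "a": {1}, "b": {1, 2}, "c": {1, 4}, "d": {1, 4, 5}, "e": {1, 5},
    "f": {1, 2, 4}, "g": {1, 2, 4, 5}, "h": {1, 2, 5}, "i": {2, 4}, "j": {2, 4, 5},
}
# Letters k-t reuse the a-j shapes with dot 3 added.
LETTER_DOTS = {**BASE_DOTS,
               **{k: BASE_DOTS[a] | {3} for k, a in zip("klmnopqrst", "abcdefghij")}}


def pin_states(letter):
    """Six booleans for dots 1-6; True means the pin is raised."""
    dots = LETTER_DOTS[letter]
    return [d in dots for d in range(1, 7)]


def to_unicode_braille(letter):
    """Unicode braille patterns start at U+2800, and dot n sets bit n - 1."""
    return chr(0x2800 + sum(1 << (d - 1) for d in LETTER_DOTS[letter]))


print(pin_states("c"))                               # [True, False, False, True, False, False]
print(f"U+{ord(to_unicode_braille('k')):04X}")       # U+2805
```

Refreshing the display amounts to recomputing `pin_states` for each cell and driving the pins to match.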

Supporting Users with Hearing Loss

Haptics serve as a silent communication channel. Smartwatches can vibrate in unique patterns to represent different contacts or message types. For example, a short-long-short vibration might mean “your partner is calling,” while a continuous pulse could signal an emergency alert.
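
A pattern such as short-long-short can be stored symbolically and expanded into a list of alternating vibrate and pause durations, which is the shape many vibration APIs accept. This is a minimal sketch. The 100 ms, 400 ms, and 150 ms durations are hypothetical and would need tuning with real users so the patterns stay distinguishable.

```python
# Hypothetical durations in milliseconds.
PULSE_MS = {"short": 100, "long": 400}
GAP_MS = 150


def pattern_timings(pulses):
    """Expand e.g. ["short", "long", "short"] into [vibrate, pause, vibrate, ...] in ms."""
    timings = []
    for i, name in enumerate(pulses):
        if i > 0:
            timings.append(GAP_MS)  # pause between consecutive pulses
        timings.append(PULSE_MS[name])
    return timings


partner_calling = pattern_timings(["short", "long", "short"])
print(partner_calling)       # [100, 150, 400, 150, 100]
print(sum(partner_calling))  # 900 (ms in total)
```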

This is especially valuable in environments where sound isn’t practical—like meetings, classrooms, or public transit. The Apple Watch’s Sound Recognition feature uses haptics to alert users to important sounds like smoke alarms or doorbells.

Enhancing Cognitive and Motor Skills

System haptics are being used in therapeutic settings to improve motor coordination and cognitive processing. Stroke patients, for example, use haptic gloves in rehabilitation to relearn hand movements through guided resistance and feedback.

Some children with autism spectrum disorder (ASD) respond well to tactile stimuli. Haptic wearables can provide calming vibrations during anxiety episodes, acting as a non-intrusive emotional-regulation aid.

The Role of AI in Advancing System Haptics

Artificial intelligence is pushing system haptics into new frontiers. Instead of relying on pre-programmed patterns, AI enables adaptive, context-aware feedback that learns from user behavior.

Machine Learning for Personalized Feedback

AI models can analyze how users interact with their devices—how hard they press, how fast they scroll, their preferred haptic intensity—and adjust feedback in real time. For example, a user who types aggressively might receive stronger haptic confirmation, while a light typist gets subtler taps.
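
The typist example can be approximated without machine learning. The sketch below swaps in a much simpler technique, an exponential moving average of press force, to illustrate the adapt-to-the-user idea; a trained model could replace the average. Force values are assumed to be normalized to 0..1, and the class name and all parameters are hypothetical.

```python
class AdaptiveIntensity:
    """Track a user's typical press force and scale haptic intensity to match.

    A simple exponential-moving-average heuristic, not a trained model.
    """

    def __init__(self, smoothing=0.1, min_intensity=0.2, max_intensity=1.0,
                 initial_force=0.5):
        self.smoothing = smoothing            # weight given to each new press
        self.min_intensity = min_intensity
        self.max_intensity = max_intensity
        self.typical_force = initial_force    # normalised 0..1

    def observe(self, press_force):
        # Move the running estimate a fraction of the way toward the new sample.
        self.typical_force += self.smoothing * (press_force - self.typical_force)

    def intensity(self):
        force = min(1.0, max(0.0, self.typical_force))
        return self.min_intensity + (self.max_intensity - self.min_intensity) * force


heavy, light = AdaptiveIntensity(), AdaptiveIntensity()
for _ in range(100):
    heavy.observe(0.9)
    light.observe(0.1)
print(round(heavy.intensity(), 2), round(light.intensity(), 2))  # 0.92 0.28
```

The minimum intensity keeps feedback perceptible even for very light typists, and the smoothing factor controls how quickly the estimate follows changes in typing style.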

Google’s AI research team has explored using neural networks to generate haptic patterns that match visual content. Imagine watching a video of rain and feeling corresponding droplet-like vibrations on your phone.

Predictive Haptics in User Interfaces

Future system haptics could anticipate user actions. If you’re about to press a delete button, the device might emit a slight resistance or warning pulse. This ‘haptic guardrail’ could prevent accidental actions without blocking functionality.

AI can also optimize battery usage by predicting when haptics are necessary. Instead of vibrating for every notification, the system learns your habits and only activates feedback during high-priority moments.

Haptic Synthesis: Creating ‘Feel’ from Data

One of the most exciting developments is haptic synthesis—converting visual or auditory data into tactile experiences. Researchers at the University of Chicago have developed algorithms that translate textures from images into haptic patterns.

For example, a photo of sandpaper could trigger a rough, gritty vibration, while silk produces a smooth glide. This could revolutionize e-commerce, allowing users to ‘feel’ fabrics before buying online.
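
The sketch below is a deliberately simple stand-in for haptic synthesis, not the University of Chicago researchers’ algorithm. It treats the pixel-to-pixel variation along one row of a grayscale image as “roughness” and maps that to vibration amplitude. The full-scale value and the sample rows are hypothetical.

```python
def roughness(row):
    """Mean absolute difference between neighbouring pixel intensities (0..255)."""
    if len(row) < 2:
        return 0.0
    return sum(abs(b - a) for a, b in zip(row, row[1:])) / (len(row) - 1)


def texture_amplitude(row, full_scale=64.0):
    """Map roughness to a 0..1 vibration amplitude, saturating at full_scale."""
    return min(1.0, roughness(row) / full_scale)


# Hypothetical grayscale samples from two photos.
silk = [200, 201, 200, 202, 201, 200]
sandpaper = [40, 180, 60, 210, 30, 190]
print(round(texture_amplitude(silk), 2))  # 0.02
print(texture_amplitude(sandpaper))       # 1.0
```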

Challenges and Limitations of Current System Haptics

Despite rapid progress, system haptics still face technical and perceptual challenges that limit their potential.

Battery Consumption and Efficiency

Haptic actuators, especially high-fidelity ones, consume significant power. Continuous use can drain a smartphone battery by up to 15%. Engineers are working on low-power actuators and predictive haptic scheduling to mitigate this.
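
Estimating what a haptic motor costs in battery terms is simple arithmetic once power draw, active time, and battery capacity are known: energy in watt-hours equals power in watts multiplied by time in hours. The numbers below are hypothetical, chosen only to show the calculation, and the sketch ignores driver-circuit losses.

```python
def battery_drain_percent(actuator_power_w, active_seconds, battery_wh, duty_cycle=1.0):
    """Percentage of battery energy used by an actuator.

    energy (Wh) = power (W) x active time (h) x fraction of that time spent vibrating.
    """
    energy_wh = actuator_power_w * (active_seconds / 3600.0) * duty_cycle
    return 100.0 * energy_wh / battery_wh


# Hypothetical: 0.2 W actuator, a 30-minute session, 10 Wh battery.
print(battery_drain_percent(0.2, 1800, 10.0))                  # about 1.0 (vibrating nonstop)
print(battery_drain_percent(0.2, 1800, 10.0, duty_cycle=0.1))  # about 0.1 (10% duty cycle)
```

The duty cycle dominates the result, which is why scheduling haptics only when needed saves more energy than small gains in actuator efficiency.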

Piezoelectric actuators are more energy-efficient than LRAs, but they’re also more expensive and harder to manufacture at scale. Balancing performance, cost, and battery life remains a key challenge.

Standardization and Fragmentation

Unlike audio or video, there’s no universal standard for haptic feedback. Each manufacturer uses proprietary systems—Apple’s Taptic Engine, Samsung’s Vibration Motor, Sony’s DualSense haptics. This fragmentation makes it difficult for developers to create consistent experiences across devices.

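Cross-platform standards exist only at the edges. The World Wide Web Consortium (W3C) Vibration API lets web pages trigger vibration patterns, but it controls only on/off timing and is not supported in every browser (Safari, for example, does not implement it). Without broader standardization, the full potential of system haptics remains locked behind brand silos.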
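
Fragmentation shows up even in something as basic as vibration timing. The W3C Vibration API’s navigator.vibrate() takes a list of durations that starts with a vibration. Android’s VibrationEffect.createWaveform(timings, repeat) expects alternating off/on durations that start with an off period, and its overload with an amplitudes array pairs each duration with a level from 0 (off) to 255. The sketch below converts between the two layouts. The helper name is hypothetical, and the output is plain data, not a call into either API.

```python
def w3c_to_android(pattern_ms, on_amplitude=255):
    """Convert a W3C-style [vibrate, pause, vibrate, ...] pattern into
    Android-style (timings, amplitudes) lists that start with an off segment."""
    if any(t < 0 for t in pattern_ms):
        raise ValueError("durations must be non-negative")
    if not 1 <= on_amplitude <= 255:
        raise ValueError("on_amplitude must be between 1 and 255")
    timings = [0] + list(pattern_ms)  # zero-length initial off period
    # Even positions are off segments (amplitude 0); odd positions vibrate.
    amplitudes = [0 if i % 2 == 0 else on_amplitude for i in range(len(timings))]
    return timings, amplitudes


print(w3c_to_android([200, 100, 200]))
# ([0, 200, 100, 200], [0, 255, 0, 255])
```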

User Perception and Overstimulation

Not all users appreciate haptics. Some find constant vibrations distracting or even anxiety-inducing. Overuse of haptic feedback can lead to ‘haptic fatigue,’ where users become desensitized or annoyed.

Designers must strike a balance between usefulness and intrusiveness. Context-aware haptics—only activating when necessary—are crucial. User customization options (intensity, duration, pattern) also help ensure inclusivity.
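
One way to combine context awareness with user control is a small gate in front of the actuator. It scales every request by the user’s intensity preference and drops non-urgent requests that arrive too soon after the previous one. This is a hypothetical sketch. The class name and two-second interval are assumptions, and the clock is injectable so the behavior can be tested.

```python
import time


class HapticGovernor:
    """Apply a user intensity preference and rate-limit non-urgent haptics."""

    def __init__(self, user_scale=1.0, min_interval_s=2.0, clock=time.monotonic):
        self.user_scale = user_scale          # user setting, e.g. 0.5 = half strength
        self.min_interval_s = min_interval_s  # hypothetical cooldown between haptics
        self.clock = clock
        self._last_fired = None

    def request(self, intensity, urgent=False):
        """Return the intensity to play, or None if the request is suppressed."""
        now = self.clock()
        too_soon = (self._last_fired is not None
                    and now - self._last_fired < self.min_interval_s)
        if too_soon and not urgent:
            return None
        self._last_fired = now
        return min(1.0, intensity * self.user_scale)


fake_now = [0.0]
governor = HapticGovernor(user_scale=0.5, clock=lambda: fake_now[0])
print(governor.request(0.8))               # 0.4
fake_now[0] = 1.0
print(governor.request(0.8))               # None (within the cooldown)
print(governor.request(0.8, urgent=True))  # 0.4 (urgent alerts always pass)
```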

The Future of System Haptics: What’s Next?

The next decade will see system haptics evolve from simple feedback tools to essential components of human-computer interaction.

Widespread Adoption in Smart Home Devices

Imagine your smart doorbell vibrating your wristwatch, or your oven sending a gentle pulse when dinner is ready. As IoT devices become more integrated, system haptics will provide seamless, non-disruptive alerts across the home.

Companies like Google (with its Nest line) and Amazon (with Alexa) are already exploring haptic-enabled smart displays and wearables for home control.

Haptics in Augmented Reality (AR)

While VR uses haptics for immersion, AR can use them for utility. AR glasses could pair with haptic wristbands to guide users through complex tasks—like assembling furniture or performing surgery—with tactile cues.

Microsoft’s HoloLens team has experimented with haptic feedback for spatial navigation. A pulse on the left wrist could mean “move left,” while a double tap signals confirmation. This reduces reliance on visual overlays, keeping the user’s field of view clear.

Bio-Integrated Haptics and Wearable Implants

The ultimate frontier is bio-integrated haptics—devices that interface directly with the nervous system. Researchers are developing haptic tattoos, epidermal sensors, and even neural implants that deliver touch sensations directly to the brain.

For example, a team at the University of Texas created a temporary ‘smart tattoo’ that vibrates in response to GPS signals, helping users navigate without looking at a phone. In the future, such technologies could restore touch to amputees using prosthetic limbs with haptic feedback.

System Haptics: A Key Pillar of Human-Centered Design

As technology becomes more invisible, the way we interact with it must become more intuitive. System haptics represent a shift from visual-dominant interfaces to multisensory experiences that respect human biology.

They’re not just about making devices feel better—they’re about making them feel *right*. When a notification tap matches the weight of a real button, or a game controller mimics the recoil of a gun, we bridge the gap between digital and physical.

“The best interfaces are those you don’t think about. System haptics are quietly becoming one of the most important tools in achieving that.” — Don Norman, Author of ‘The Design of Everyday Things’

From accessibility to gaming, from healthcare to automotive safety, system haptics are redefining how we connect with technology. As AI, materials science, and neuroscience converge, the future of touch is not just reactive—it’s intelligent, adaptive, and deeply human.

What are system haptics?

System haptics are advanced tactile feedback systems in electronic devices that use vibrations, forces, or motions to simulate touch. They go beyond simple buzzing to deliver precise, context-aware sensations that enhance user interaction.

How do system haptics improve user experience?

They provide intuitive feedback that aligns with visual and auditory cues, making interactions feel more natural and responsive. For example, haptic feedback on a smartphone keyboard confirms key presses, reducing errors and improving typing speed.

Which devices use system haptics?

Smartphones (like iPhones with Taptic Engine), smartwatches, gaming controllers (like PS5 DualSense), VR gloves, and automotive systems all use system haptics to enhance functionality and immersion.

Can system haptics help people with disabilities?

Yes. They assist visually impaired users with navigation cues, help hearing-impaired individuals receive silent alerts, and support motor rehabilitation through guided tactile feedback in therapy devices.

What’s the future of system haptics?

The future includes AI-driven adaptive feedback, bio-integrated haptic implants, standardized APIs, and broader use in AR, smart homes, and healthcare—making touch a central part of digital interaction.

System haptics have evolved from basic vibrations into a sophisticated language of touch. They enhance usability, accessibility, and immersion across smartphones, wearables, gaming, and beyond. With advancements in AI, materials, and neuroscience, the next generation of haptic technology will not only simulate touch but understand it—adapting to individual needs and contexts. As we move toward more intuitive, human-centered design, system haptics will play a crucial role in making technology feel less like a machine and more like a natural extension of ourselves.

Further Reading: